Extended Reality (XR)

Creating Parallel Worlds & Unique Digital Experiences.

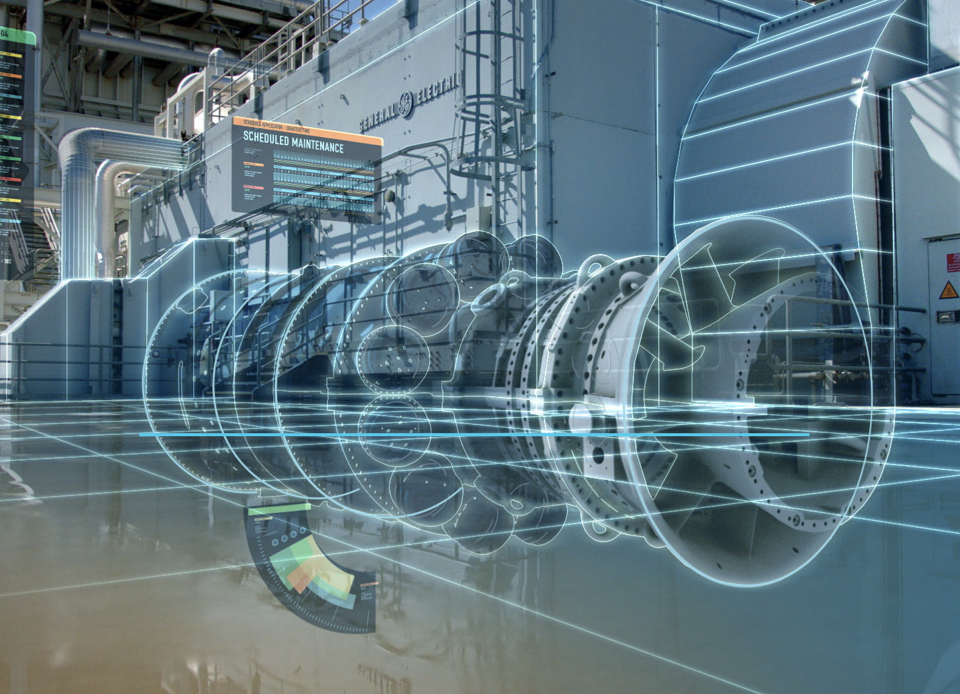

Whether your reality is virtual, augmented, blended, mixed or actual, the combination of 3D imagery interacting with Internet of Things (IoT) devices and data is changing how we work and play.

Extended Reality (XR) is a relatively new addition to the technical vocabulary, and few people are familiar with it yet. Extended Reality refers to all combined real-and-virtual environments and human-machine interactions generated by computer technology and wearables. It includes all of its descriptive forms, such as Augmented Reality (AR), Virtual Reality (VR), and Mixed Reality (MR).

In other words, XR is an umbrella term that brings all three realities (AR, VR, MR) together under one name, reducing public confusion. Extended reality spans a wide range of levels of virtuality, from partial sensory input to fully immersive virtuality.

Sovereign is at the cutting edge of XR technologies and can help you build amazing and unique digital experiences.

AR / VR / MR Application Development

Create intelligent and immersive AR experiences that fully integrate with the real world.

Augmented Reality (AR)

Augmented reality is the overlaying of digitally created content on top of the real world. Augmented reality (or ‘AR’) allows the user to interact with both the real world and digital elements or augmentations. AR can be delivered to users via headsets such as Microsoft’s HoloLens, or through a smartphone’s camera.

In both practical and experimental implementations, augmented reality can also replace or diminish the user’s perception of reality. This altered perception could include simulating an ocular condition for medical training, or gradually obstructing reality to introduce a game world. It is worth noting that there is a point at which augmented reality and virtual reality likely merge or overlap.

Virtual Reality (VR)

A high level of VR immersion is achieved by engaging your two most prominent senses, vision and hearing, through a VR headset and headphones. The VR headset extends the virtual world or experience nearly to the edge of your natural field of view.

When you look around, you experience the environment just as you would when looking around in real life. Headphones amplify the experience by blocking out surrounding noise while letting you hear the sounds within the VR experience.

When you move your head, the sounds within the VR environment move around you like they would in real life.
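The head-tracked audio described above can be sketched in a deliberately simplified form. This is an illustration under stated assumptions, not how any particular headset works: it ignores elevation and head-related transfer functions (HRTFs) and uses only the source's horizontal angle relative to the listener's facing direction to set constant-power left/right gains. The function names are hypothetical.

```python
import math


def relative_azimuth(listener_xy, head_yaw_rad, source_xy):
    """Horizontal angle of a sound source relative to the listener's facing direction.

    Assumes a top-down x/y plane with yaw measured counterclockwise from +x.
    Returns radians in (-pi, pi]: 0 = straight ahead, positive = to the left.
    """
    dx = source_xy[0] - listener_xy[0]
    dy = source_xy[1] - listener_xy[1]
    rel = math.atan2(dy, dx) - head_yaw_rad
    # Wrap the difference back into (-pi, pi]
    return math.atan2(math.sin(rel), math.cos(rel))


def constant_power_pan(azimuth_rad):
    """Return (left_gain, right_gain) with left**2 + right**2 == 1."""
    # sin() maps left (+pi/2) to +1 and right (-pi/2) to -1;
    # sources behind the listener fold toward the side they are on.
    pan = math.sin(azimuth_rad)
    theta = (pan + 1) * math.pi / 4  # 0 -> fully right, pi/2 -> fully left
    return math.sin(theta), math.cos(theta)


# Listener at the origin, source one metre to the +y side.
source = (0.0, 1.0)
for yaw_deg in (0, 90):  # facing +x, then turning the head to face +y
    az = relative_azimuth((0.0, 0.0), math.radians(yaw_deg), source)
    left, right = constant_power_pan(az)
    print(f"yaw={yaw_deg:3d} deg  left={left:.2f}  right={right:.2f}")
```

Facing +x, the source sits fully in the left channel; after turning the head 90 degrees toward it, the same source is centred between both ears, which is the "sounds move around you" effect in its simplest form.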

Mixed Reality (MR)

A Mixed Reality experience seamlessly blends the user’s real-world environment with digitally created content, so that both can coexist and interact with each other. It is often found in VR experiences and installations, and can be understood as a continuum on which pure VR and pure AR are both found. It is comparable to Immersive Entertainment / Hyper-Reality.

‘Mixed reality’ has seen very broad usage as a marketing term, and many alternative definitions co-exist today, some encompassing AR experiences, or experiences that move back and forth between VR and AR. However, the definition above is increasingly emerging as the agreed meaning of the term.

The Opportunity:

While mixed reality poses many design challenges, and much progress is still needed in the platforms that host and support it, there is a tremendous opportunity to bring a diversity of experiences and display methods to audiences through MR. That should mean more content can reach and serve a broader range of people, including those who do not find traditional VR or AR suited to their abilities, comfort, taste, or budget.

The Best Haptic Experiences Start with Immersion

What is real-time rendering in 3D and how does it work?

3D rendering is the process of producing an image based on three-dimensional data stored on your computer. It’s also considered to be a creative process, much like photography or cinematography, because it makes use of light and ultimately produces images.

In 3D rendering, the computer converts 3D wireframe models into 2D images with photorealistic (or near-photorealistic) 3D effects. Rendering a single image or frame can take anywhere from seconds to days. There are two major types of 3D rendering, real-time and offline (pre-rendering), and the main difference between them is the speed at which the images are calculated and processed.
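The core step in turning 3D data into a 2D image is perspective projection. The sketch below assumes a simple pinhole camera at the origin looking down the +z axis, with a focal length expressed in pixels, and projects the eight corners of a wireframe cube onto a 640 x 480 image. It omits clipping, rasterization, and lighting, which a real renderer also performs; the names and values are illustrative.

```python
def project_point(point, focal_px, width, height):
    """Project a camera-space point (x, y, z) to pixel coordinates (u, v).

    Assumes a pinhole camera at the origin looking down +z, with +y up and
    the image origin at the top-left corner. Returns None for points at or
    behind the camera plane.
    """
    x, y, z = point
    if z <= 0:
        return None
    cx, cy = width / 2, height / 2
    # Perspective divide: the farther away a point is, the closer it lands to the centre.
    u = cx + focal_px * x / z
    v = cy - focal_px * y / z  # minus because image rows grow downward
    return (u, v)


# Corners of a 2-unit cube whose faces sit 5 and 7 units in front of the camera.
cube = [(x, y, z) for x in (-1, 1) for y in (-1, 1) for z in (5, 7)]
for corner in cube:
    print(corner, "->", project_point(corner, focal_px=400, width=640, height=480))
```

The near face (z = 5) projects to a larger square than the far face (z = 7); that shrinking with distance is what makes the flat image read as 3D.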

In real-time rendering, most common in video games and interactive graphics, 3D images are calculated so quickly that scenes, which consist of many successive images, appear to unfold in real time as players interact with your game.

That’s why interactivity and speed play important roles in the real-time rendering process. For example, if you want to move a character in your scene, you need to make sure that the character’s movement is updated before the next frame is drawn, so that the motion is displayed at a rate the human eye perceives as natural.
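The update-before-draw ordering described above is commonly implemented as a fixed-timestep game loop. The sketch below is a generic illustration, not tied to any engine: real elapsed frame times are accumulated, the simulation (here a hypothetical `Character` moving at a constant speed) is advanced in fixed steps, and only then is the frame "drawn". Frame durations are passed in as a list to keep the example deterministic; a real loop would measure them with a clock.

```python
from dataclasses import dataclass


@dataclass
class Character:
    """Hypothetical character that moves along x at a constant speed."""
    x: float = 0.0
    speed: float = 2.0  # units per second (illustrative value)

    def update(self, dt: float) -> None:
        self.x += self.speed * dt


def run_frames(character, frame_times, step=1 / 60):
    """Fixed-timestep loop: update the simulation first, then draw each frame."""
    accumulator = 0.0
    drawn_positions = []
    for elapsed in frame_times:
        accumulator += elapsed
        # Advance the game state in fixed increments so movement speed does not
        # depend on how fast frames happen to be rendered.
        while accumulator >= step:
            character.update(step)
            accumulator -= step
        # Stand-in for rendering: record the state this frame would display.
        drawn_positions.append(character.x)
    return drawn_positions


fast = run_frames(Character(), [1 / 60] * 60)  # one second at 60 fps
slow = run_frames(Character(), [1 / 30] * 30)  # one second at 30 fps
print(round(fast[-1], 6), round(slow[-1], 6))
```

Both runs cover one second of game time, so the character ends up at the same position (2 units) whether the renderer manages 60 or 30 frames per second; only the smoothness of what is drawn differs.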

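The main goal is to achieve the highest possible degree of photorealism at an acceptable minimum rendering speed, commonly cited as 24 frames per second: the traditional film frame rate and often described as the minimum needed to create the illusion of movement. Interactive applications such as games and VR usually aim higher, typically 30, 60, or 90 frames per second.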
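As a worked illustration, the frame rate $f$ fixes the time budget available to compute each frame; every update and draw for that frame must finish within it:

$$t_{\text{frame}} = \frac{1000\ \text{ms}}{f}$$

$$f = 24:\ t_{\text{frame}} \approx 41.7\ \text{ms},\qquad f = 60:\ t_{\text{frame}} \approx 16.7\ \text{ms},\qquad f = 90:\ t_{\text{frame}} \approx 11.1\ \text{ms}$$

Higher frame rates therefore leave less time per frame for the renderer to pursue photorealism.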


Haptics (AKA “Touch Feedback”)

Haptics simulate and stimulate the sense of touch by applying various forces, most commonly vibrations, to the user via input devices or dedicated haptic wearables.

Haptics are used to lend tangibility to an object or movement on screen. Vibrating game controllers are the classic example, but the term also covers vibration delivered through a smartphone screen and modern approaches such as ultrasound speaker arrays that project textures into the air, which VR users can feel as they interact with the content.

The Opportunity:

Haptics offer another way to improve VR immersion, particularly the sense of presence in VR.

Precisely controlled device actuators create rich tactile effects and amazing digital experiences

A powerful API. High-quality performance. Easy integration. Immersion’s haptic technology gives you everything you need to create rich tactile effects across applications and hardware configurations.

TouchSense Technology: Optimized Performance for Producing the Best Haptic Experiences Possible

Create a high-end experience with a cost-effective and easy-to-integrate solution. TouchSense technology optimizes actuators and haptic driver ICs for better performance.

- 100+ pre-defined built-in tactile effects tuned to common actuator types

- Ability to add features that quickly bring new capabilities to market

- Customized use case implementation

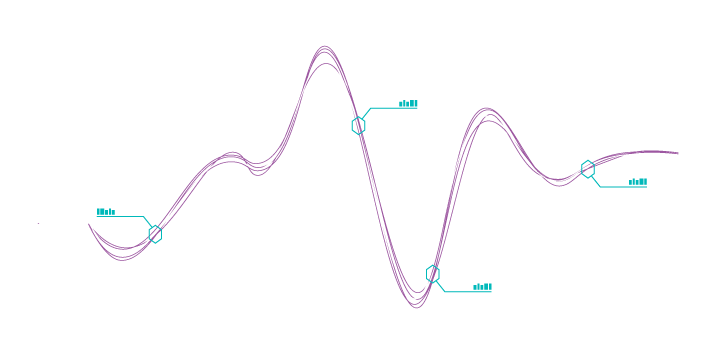

Getting the Haptic Experience Just Right

A great haptic experience depends on the haptic system, but not all components are created equal. Immersion’s Active Sensing Technology compensates for differences in haptic hardware by recalibrating the amount of power sent to the actuator based on the actuator’s measured performance. With Active Sensing Technology, you get great, consistent performance regardless of actuator quality.

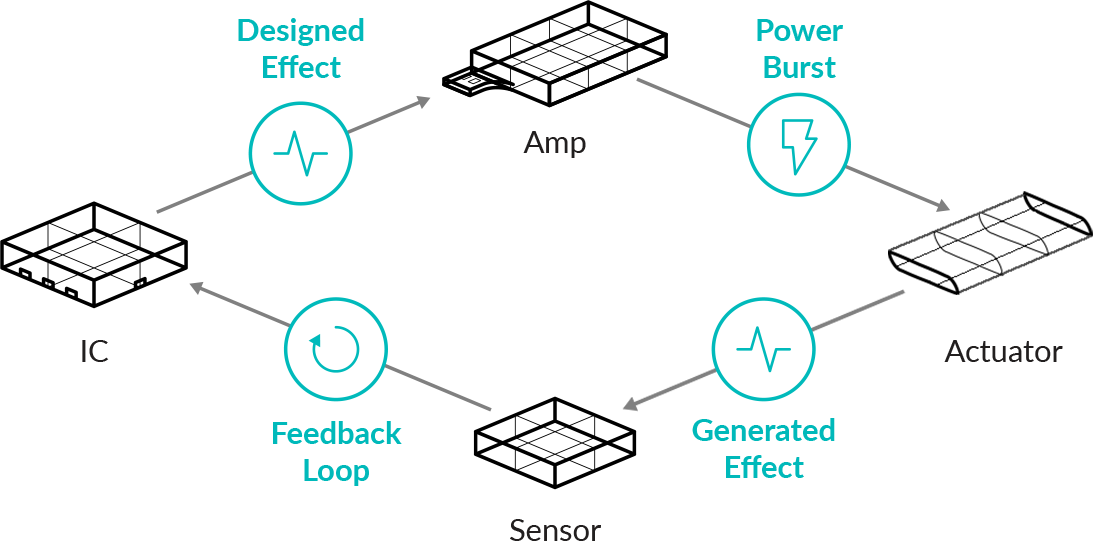

A Complex Algorithm & System Design

Active Sensing Technology achieves high-definition haptic effects by relying on a sensor to measure and send actuator acceleration data to the IC controller for real-time recalibration. Using a smart algorithm to store and process the data, the controller adjusts the drive signal’s timing and strength for more precise control of the actuator every time. Such precision means optimized drive signals and exceptional braking for crisp effects and better, more consistent performance from the actuator.
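The closed-loop idea (measure the actuator's acceleration, then correct the drive signal) can be sketched with a simple proportional controller. This is a hypothetical illustration, not Immersion's algorithm: it assumes each actuator responds linearly with its own unknown gain (in g per unit of drive), and it adjusts only drive strength, not timing or braking. Two actuators of different quality converge on the same target acceleration with different drive levels.

```python
def calibrate_drive(target_g, measure_accel, drive=1.0, k=0.5,
                    iterations=20, max_drive=5.0):
    """Iteratively adjust the drive level until measured acceleration hits target_g.

    measure_accel(drive) stands in for the sensor reading (peak acceleration in g).
    k is the proportional gain; for a linear actuator with gain a, the loop
    converges when 0 < k * a < 2. The drive is clamped to [0, max_drive].
    """
    for _ in range(iterations):
        error = target_g - measure_accel(drive)
        drive = min(max_drive, max(0.0, drive + k * error))
    return drive


# Hypothetical actuators: the same drive produces different accelerations.
def weak(drive):
    return 0.8 * drive  # g per unit of drive


def strong(drive):
    return 1.6 * drive


for name, actuator in (("weak", weak), ("strong", strong)):
    d = calibrate_drive(1.2, actuator)
    print(f"{name}: drive={d:.3f}, acceleration={actuator(d):.3f} g")
```

The weaker actuator ends up driven roughly twice as hard as the stronger one, and both deliver the same felt acceleration, which is the kind of consistency the paragraph above describes.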

Active Sensing Technology

Smart Sensing System for Getting the Haptic Experience Just Right.

Active Sensing Technology takes haptics to the next level with motion sensing plus smart control technology. Responding rapidly to the haptic system’s current state, Active Sensing Technology can realistically recreate button pushes, dial turns, and sliders. The technology enables device makers to deliver a fully interactive digital display with programmable touch surfaces.

Made for suspension mounting and whole device vibration, Active Sensing Technology can be used for automotive, mobile and IoT devices.

Interactive Touch Displays, Programmable Digital Buttons, Localized-haptics & More

High-quality Performance Through Precise Actuator Control

Precisely reproduce mechanical acceleration profiles with increased acceleration, frequency range, and strength

Improved Component Compatibility & Flexibility

Source actuators and hardware components from multiple suppliers using the same system design, and get comparable performance

Robust Braking

Get ultra-fast, robust braking without auto-resonance detection, and reduce effect tails to undetectable levels
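Braking and "effect tails" can be illustrated with a toy physical model. The sketch below assumes an LRA behaves like a unit mass on a spring with light damping and an assumed resonant frequency of 170 Hz; after a 20-cycle drive it either lets the actuator ring down on its own or applies a velocity-opposing braking force capped at the drive strength. It is not Immersion's braking method (which the source does not describe); it only shows why active braking shortens the residual vibration.

```python
import math


def residual_tail(brake, freq_hz=170.0, zeta=0.02, drive_cycles=20,
                  total_time=0.4, dt=1e-5, threshold=0.05, f_max=1.0):
    """Simulate an LRA as a unit-mass spring-damper and return the effect 'tail':
    seconds from the end of the drive until displacement stays below
    `threshold` x the peak displacement reached while driving.
    """
    w0 = 2 * math.pi * freq_hz
    k = w0 * w0          # stiffness for a unit mass resonating at freq_hz
    c = 2 * zeta * w0    # the actuator's own (light) damping
    c_brake = w0         # extra damping the brake aims for (damping ratio 0.5)
    t_stop = drive_cycles / freq_hz
    x = v = peak = 0.0
    last_above = t_stop
    for i in range(int(total_time / dt)):
        t = i * dt
        if t < t_stop:
            force = f_max * math.sin(w0 * t)  # drive at resonance
        elif brake:
            # Push against the motion, limited to the same force budget as the drive.
            force = max(-f_max, min(f_max, -c_brake * v))
        else:
            force = 0.0  # coast: let the actuator ring down by itself
        a = force - c * v - k * x
        v += a * dt  # semi-implicit Euler step
        x += v * dt
        if t < t_stop:
            peak = max(peak, abs(x))
        elif abs(x) > threshold * peak:
            last_above = t
    return last_above - t_stop


passive = residual_tail(brake=False)
braked = residual_tail(brake=True)
print(f"tail without braking: {passive * 1000:.1f} ms, with braking: {braked * 1000:.1f} ms")
```

With these assumed parameters the braked tail is several times shorter than the free ring-down, which is the difference between a crisp "click" and a lingering buzz.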

More Consistent Performance

Equalize performance among actuators of differing grades, cost, and quality

Prime II Haptic

Real World Interactions for Virtual World Experiences

The Manus Prime II Haptic gloves are next-generation, cutting-edge haptic feedback technology. The sense of physical touch is not easily replicated; with the Prime II Haptic, precision is paramount to deliver intuitive, natural interactions with low latency between the user’s real and virtual hands. Whether holding digital objects, feeling textures, pushing buttons, or pulling handles inside virtual experiences, the gloves provide a snug, comfortable fit that ensures delicate vibrations are felt across the hands and fingers, while a hyper-fine feedback loop from motor control to haptics creates a powerful sense of presence in VR. The gloves are immune to magnetic interference.

Prime II Haptic significantly reduces cost and time for teams collaborating on VR projects in motion capture, robotics, prototyping, educational VR, virtual training, and more. It can also take VR arcade games to a whole new level of play.

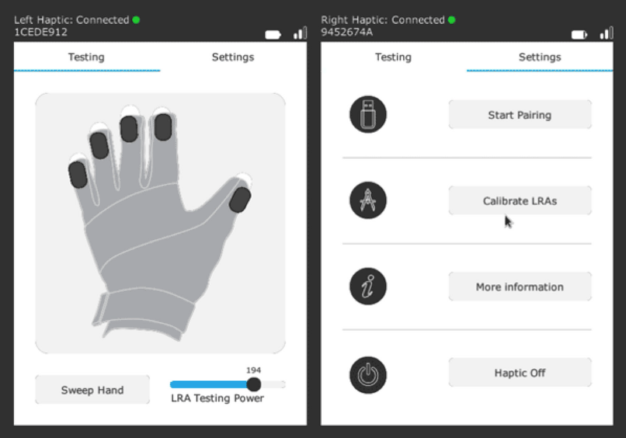

Programmable Feedback

Haptic feedback is achieved by assigning a programmable LRA (linear resonant actuator) haptic module to each finger, which plays back unique signals depending on the interactions with virtual objects and materials inside the virtual experience.

Use the user-friendly dashboard and driver to calibrate and control your gloves. Haptic feedback is fully customizable, with an integrated material editor and adjusters for signal strength, frequency, and resonance.
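Per-finger feedback driven by material settings can be sketched as a mapping from virtual contacts to vibration commands. This is a hypothetical illustration, not the Manus SDK or dashboard API: the `Material` profile (strength and frequency), the contact format, and the 10 mm "full press" depth are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Material:
    """Hypothetical material profile, like an entry in a material editor."""
    strength: float      # 0..1 signal strength at a full press
    frequency_hz: float  # vibration frequency associated with the material


FINGERS = ("thumb", "index", "middle", "ring", "pinky")


def finger_commands(contacts, materials, full_press_mm=10.0):
    """Turn per-finger contacts into per-finger (amplitude, frequency) commands.

    contacts maps a finger name to (material_name, penetration_depth_mm);
    fingers without contact receive (0.0, 0.0), i.e. their actuator stays off.
    """
    commands = {}
    for finger in FINGERS:
        if finger in contacts:
            name, depth_mm = contacts[finger]
            material = materials[name]
            # Press harder -> stronger vibration, capped at the material's strength.
            press = min(1.0, max(0.0, depth_mm / full_press_mm))
            commands[finger] = (material.strength * press, material.frequency_hz)
        else:
            commands[finger] = (0.0, 0.0)
    return commands


materials = {"rubber": Material(0.6, 80.0), "metal": Material(1.0, 250.0)}
contacts = {"thumb": ("rubber", 20.0), "index": ("metal", 5.0)}
for finger, (amp, freq) in finger_commands(contacts, materials).items():
    print(f"{finger:>6}: amplitude={amp:.2f}, frequency={freq:.0f} Hz")
```

Keeping one command per finger mirrors the one-actuator-per-finger layout described above: each finger's signal depends only on what that finger is touching.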

Precise Finger Tracking

Merging robustness with precision, all Prime II series gloves incorporate industrial-grade flex sensors fused with high-performance inertial measurement units (IMUs), raising the bar in high-fidelity finger tracking. The flex sensors measure 2 joints per finger, complemented by 11-DOF tracking of individual fingers by the IMUs to capture fine finger movements. IMU drift is prevented by newly implemented automatic filters that use the robust flex sensors as reference points. This enables detailed measurement of finger spreading without loss of quality during continuous live performance capture.
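The fusion of drift-free flex sensors with smooth but drifting IMU data can be illustrated with a complementary filter, a common and simple sensor-fusion technique. This is a generic sketch, not the filter Manus uses: it assumes the flex sensor reports an absolute joint angle and the IMU gyro reports an angular rate with a constant bias.

```python
def fuse_joint_angle(flex_deg, gyro_dps, dt, alpha=0.98):
    """Complementary filter for one finger joint.

    flex_deg: absolute joint angles from the flex sensor (degrees).
    gyro_dps: angular rates from the IMU gyro (degrees per second).
    alpha:    how much to trust the integrated gyro from one sample to the next.
    """
    angle = flex_deg[0]
    fused = []
    for flex, rate in zip(flex_deg, gyro_dps):
        predicted = angle + rate * dt                   # smooth, but drifts with bias
        angle = alpha * predicted + (1 - alpha) * flex  # flex sensor pulls it back
        fused.append(angle)
    return fused


# A joint held still at 30 degrees for 10 s, sampled at 100 Hz.
n, dt = 1000, 0.01
flex = [30.0] * n
gyro = [2.0] * n  # pure bias: the joint is not actually moving
gyro_only = 30.0 + sum(r * dt for r in gyro)
fused = fuse_joint_angle(flex, gyro, dt)
print(f"gyro only: {gyro_only:.1f} deg, fused: {fused[-1]:.2f} deg")
```

Integrating the biased gyro alone drifts to about 50 degrees after 10 seconds, while the fused estimate settles within about one degree of the true 30 degrees. Lowering `alpha` shrinks that residual offset but lets more flex-sensor noise through.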

An Immersive Haptic Experience

Immerse yourself in the most powerful, high-fidelity haptic experience. The Vest Edge gives you 360 degrees of immersion, delivering powerful, accurate, and detailed sensations. It’s the perfect companion for at-home gaming, movies, VR, and music, pumping low frequencies through your body for a unique and mesmerizing audio experience.

We can tune everything from vibration magnitude and duration to frequency and rhythm, creating a haptic experience that’s uniquely yours.
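Tuning magnitude, duration, frequency, and rhythm can be pictured as building a drive waveform. The sketch below is a hypothetical illustration, not the vest's actual control interface: it generates a train of sine bursts at an assumed 8 kHz sample rate, where pulse length and gap length together set the rhythm.

```python
import math


def vibration_pattern(magnitude, frequency_hz, pulse_ms, gap_ms, pulses,
                      sample_rate=8000):
    """Drive waveform made of `pulses` sine bursts separated by silent gaps.

    magnitude:    peak amplitude of each burst (0..1)
    frequency_hz: vibration frequency inside a burst
    pulse_ms:     burst duration; gap_ms: silence between bursts (the rhythm)
    """
    pulse_n = int(sample_rate * pulse_ms / 1000)
    gap_n = int(sample_rate * gap_ms / 1000)
    samples = []
    for p in range(pulses):
        for i in range(pulse_n):
            t = i / sample_rate
            samples.append(magnitude * math.sin(2 * math.pi * frequency_hz * t))
        if p < pulses - 1:  # no trailing gap after the last burst
            samples.extend([0.0] * gap_n)
    return samples


# Three 50 ms bursts at 100 Hz with 100 ms pauses between them.
wave = vibration_pattern(0.8, 100.0, 50, 100, 3)
print(f"{len(wave)} samples, {len(wave) / 8000 * 1000:.0f} ms, peak {max(wave):.2f}")
```

Each of the four tunable properties maps to one parameter, so changing the "feel" of an effect means changing numbers rather than hardware.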

Motion Capture for Sport, Ergonomics, Motion Analysis, Human Machine Interaction (HMI) or Gait Analysis

Motion Capture for Research, Ergonomics and Sport

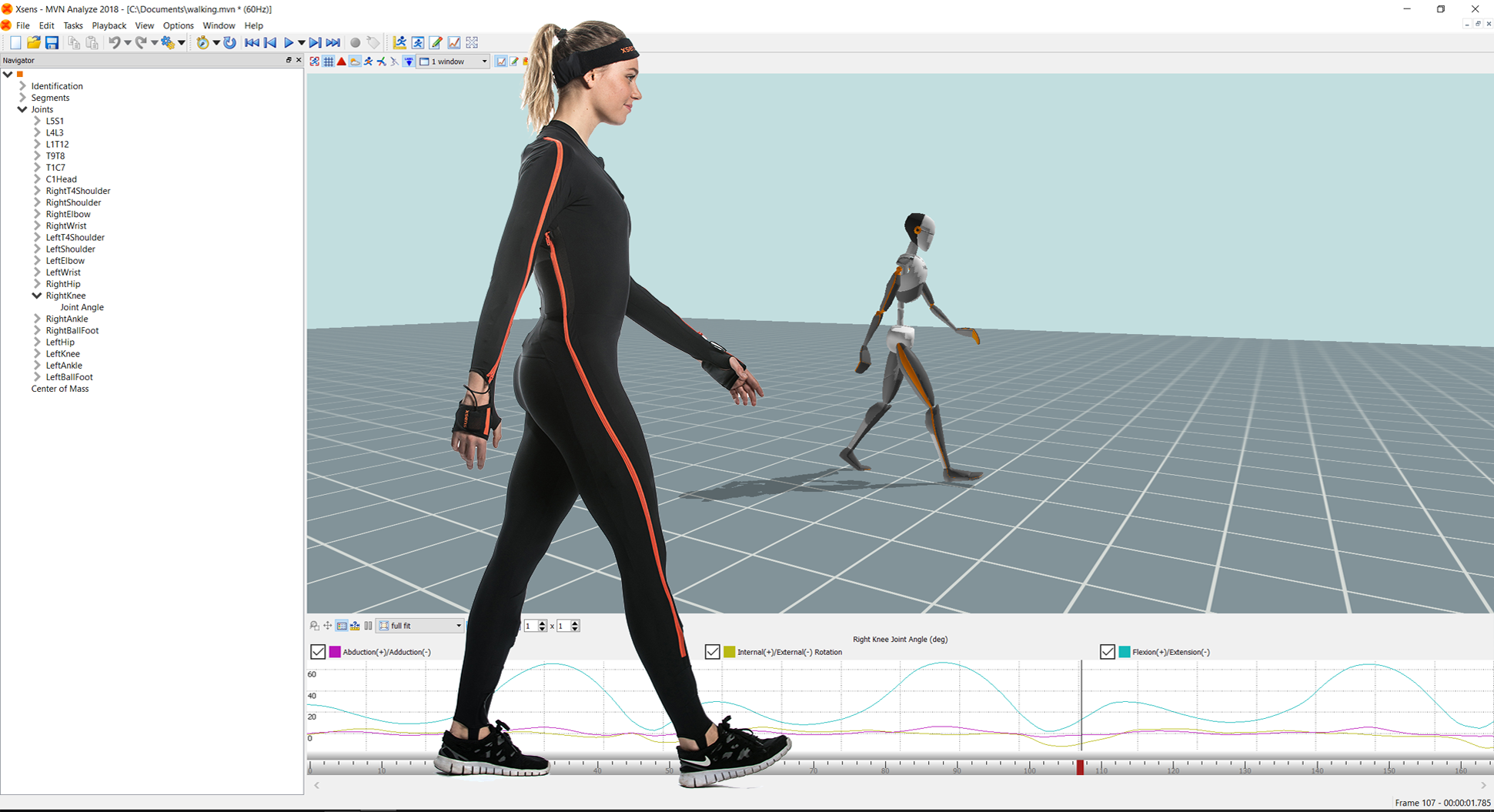

Xsens motion analysis technology is available in full-body 3D kinematics solutions & 3D motion trackers to integrate in your real-time applications.

Motion Capture for Animation and VFX

Xsens motion capture solutions are unmatched in ease-of-use, robustness and reliability. Xsens produces production-ready data and is the ideal tool for animators.

What is Motion Capture?

Motion capture (also referred to as mo-cap or mocap) is the process of digitally recording the movement of people. It is used in entertainment, sports, medical applications, ergonomics, and robotics. In filmmaking and game development, it refers to recording the actions of actors for animation or visual effects. A famous example of a film that makes extensive use of motion capture is Avatar. When it covers the full body, face, and fingers, or captures subtle expressions, it is also referred to as performance capture.

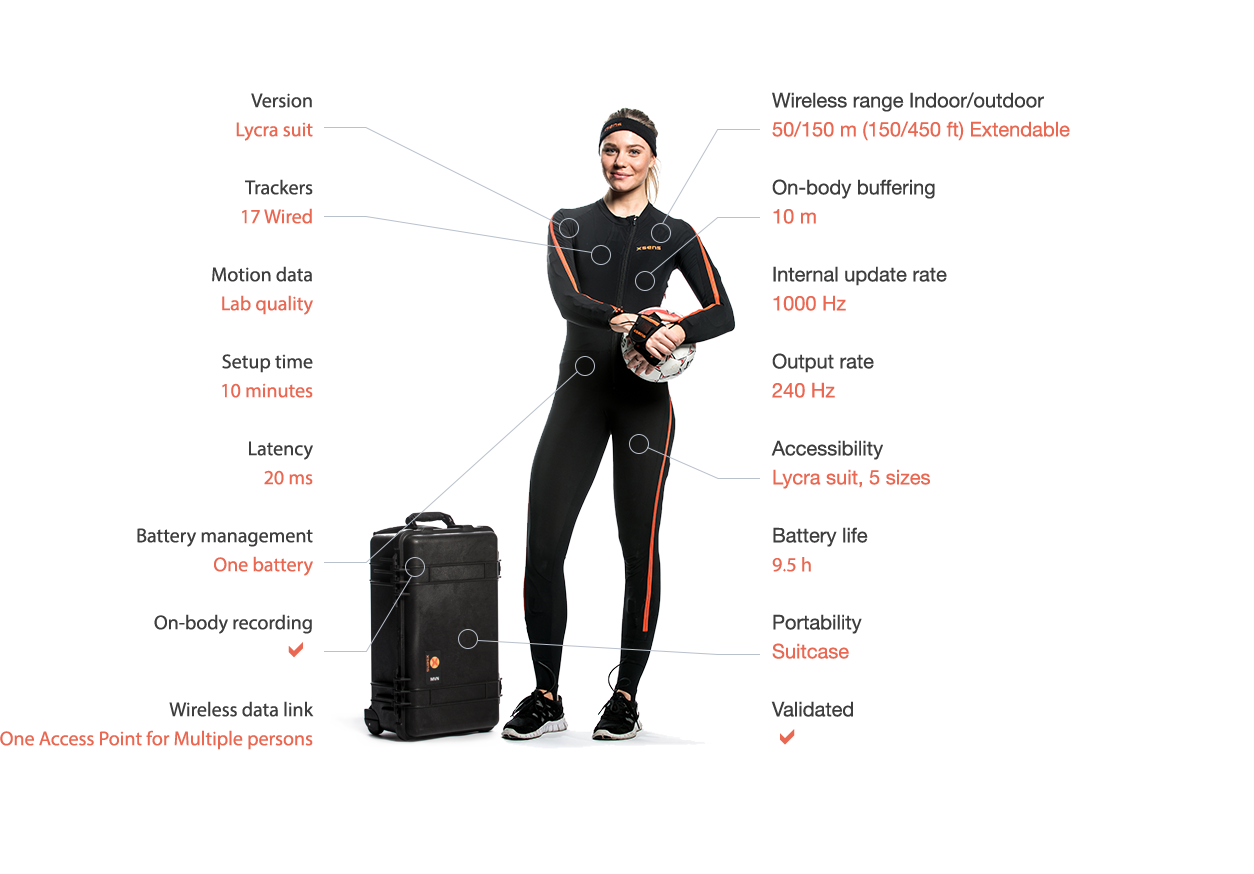

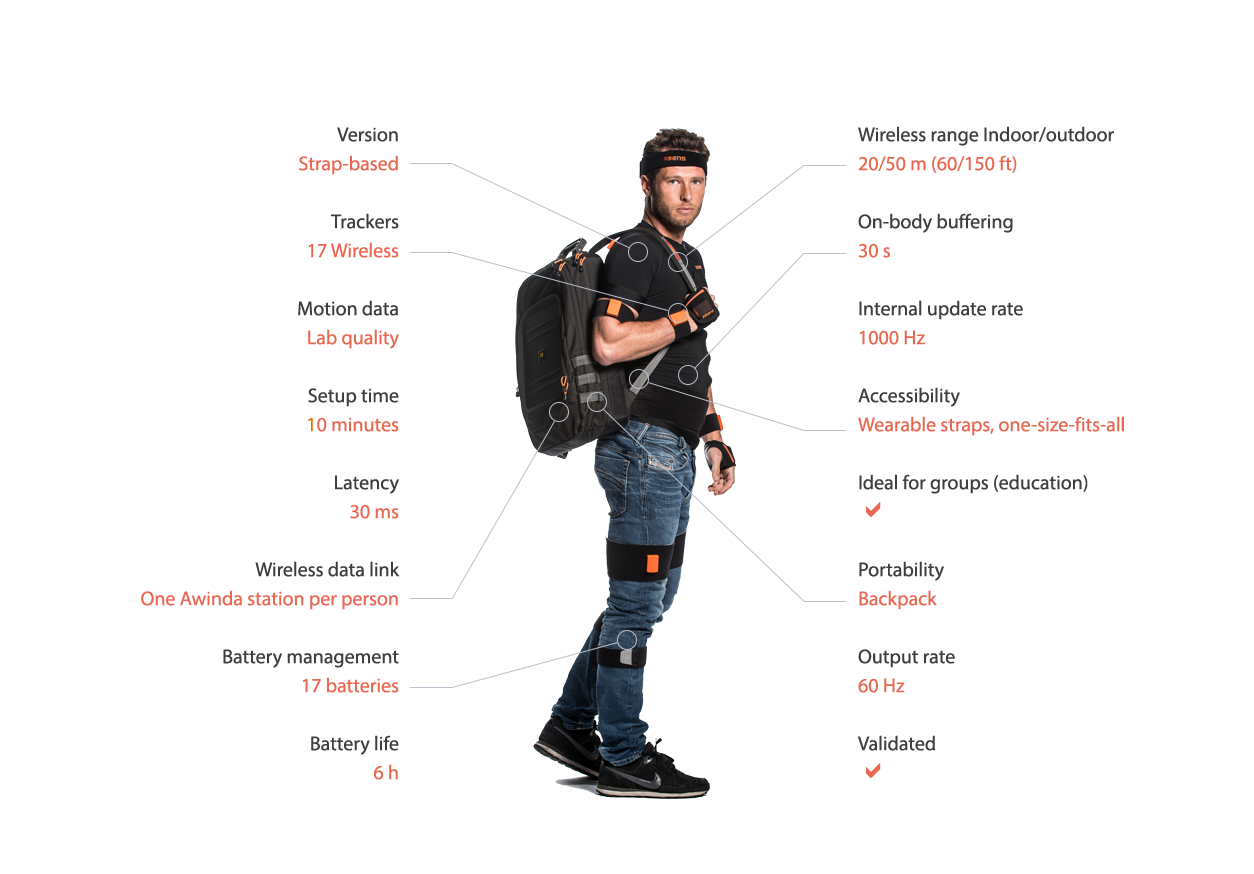


Motion Capture Software and Hardware

Xsens provides proprietary motion capture software to use in conjunction with Xsens motion capture hardware. The software is used to record, monitor and review movement. Users can capture anywhere, in any environment, with total reliability. Xsens motion capture software is available in two versions:

- MVN Animate for Animation and VFX

- MVN Analyze for Research, Ergonomics and Sport

Motion capture with Xsens can be done in two hardware versions, the MVN Link suit and the MVN Awinda straps, both with their own characteristics.

Interested in what kind of Motion Capture hardware is available? Have a look at the history of motion capture page or contact us.

Optical and Inertial Motion Capture

To explain the difference between optical motion capture and inertial motion capture, we created a dedicated landing page.

Motion Capture for Research, Ergonomics and Sport

MVN Analyze is a full-body human measurement system based on inertial sensors, biomechanical models, and sensor fusion algorithms. It is easy to use, has a short setup time, and delivers validated data output instantly. MVN Analyze is optimized for:

- Sports Science

- Ergonomics & Human factors

- Motion analysis

- Human Machine Interaction

- Gait analysis

Sports Science

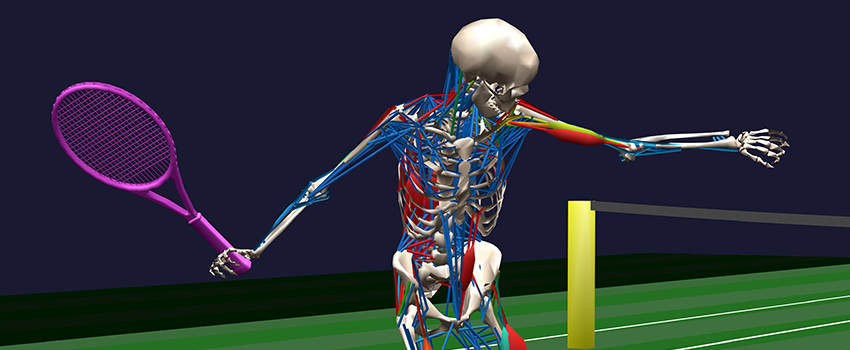

Sports biomechanics, or sports biomechanical analysis, is the scientific discipline within sports science that studies the (bio)mechanical parameters of human movement during sports. Also called the physics of sports, it is based on the analysis of professional athletes and sporting activities in general. These studies are performed to gain a greater understanding of athletic performance by measuring the kinematics of the human body.
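Measuring body kinematics often comes down to computing joint angles from tracked segment positions. The sketch below is a generic illustration, not Xsens MVN's biomechanical model: it computes the angle at a joint, such as the knee, from three 3D points, for example hip, knee, and ankle positions in metres.

```python
import math


def joint_angle_deg(proximal, joint, distal):
    """Angle at `joint` (degrees) between segments joint->proximal and joint->distal,
    e.g. hip-knee-ankle for the knee. 180 means a fully straight limb.
    """
    u = [p - j for p, j in zip(proximal, joint)]
    w = [d - j for d, j in zip(distal, joint)]
    dot = sum(a * b for a, b in zip(u, w))
    norm = math.hypot(*u) * math.hypot(*w)
    # Clamp to guard against rounding just outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))


hip, knee, ankle = (0.0, 0.9, 0.0), (0.1, 0.5, 0.0), (0.0, 0.1, 0.0)
angle = joint_angle_deg(hip, knee, ankle)
print(f"knee angle {angle:.1f} deg, flexion {180 - angle:.1f} deg")
```

Clinical and sports reports usually express this as flexion (180 degrees minus the included angle), and repeating the calculation per sample yields joint-angle curves over a movement.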

Below you will find more information about:

- Sports science in general

- Sports science with inertial sensor technology

- Applications, customer cases and products for sports science

Motion Analysis

Human movement or motion analysis is the observation and description of human movement. It is often carried out in a laboratory. Simple analysis may involve only observation, while advanced analysis typically uses technology, such as high-speed or optical/optoelectronic cameras, to generate the kinematics needed for analysis. Force plates and/or electromyography are often combined with these to provide complete information.

Human Machine Interaction

Human Machine Interaction is a multidisciplinary field with contributions from Human-Computer Interaction (HCI), Human-Robot Interaction (HRI), robotics, Artificial Intelligence (AI), humanoid robots, and exoskeleton control.

Informing Musculoskeletal models with Xsens

Enabling next-level biomechanics

The measurement of individual muscle forces, ligament forces and internal joint contact forces is essential to understand the mechanical mechanisms of human movement. However, such quantities cannot be measured without invasive methods, which has led to the development of computational musculoskeletal models. These musculoskeletal models have allowed researchers to estimate parameters difficult to measure non-invasively and investigate a wide variety of topics.
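A minimal worked illustration, assuming a single muscle acting about a single joint in static equilibrium (a deliberate simplification of what full musculoskeletal models solve): the muscle force $F_m$ with moment arm $r_m$ must balance an external load $W$ acting at distance $d_W$ from the joint,

$$F_m\, r_m = W\, d_W \quad\Rightarrow\quad F_m = \frac{W\, d_W}{r_m}$$

With illustrative values $W = 20\ \text{N}$, $d_W = 0.30\ \text{m}$ and $r_m = 0.04\ \text{m}$, this gives $F_m = 150\ \text{N}$. In reality several muscles and ligaments share the joint moment, so this single equation no longer determines the individual forces, which is why computational models are needed.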

Motion Capture for Animation and VFX

MVN Animate is unmatched in ease of use, has robust and reliable hardware, and produces production-quality data. Xsens MVN is the ideal tool for professional animators. MVN Animate is optimized for:

- Film and VFX

- Game Development

- Live Entertainment

- Virtual YouTuber (VTuber)

- Advertising

- VR, AR and MR

Visual Effects

Using Xsens MVN Animate, both large and small studios can cost-effectively animate CG characters, recording accurate full-body motion data without the need for an optical camera setup.

No limits

Capture clean full-body motion in any environment. In the studio, on location, or anywhere!

CG Characters, no cleanup

Use raw data to create realistic CG animations and breathe life-like motion into your character rigging.

Plugs into your workflow

Xsens seamlessly integrates with Unity, Unreal, Autodesk Maya, and much more.

Find out more about our VFX Solution

Game Development

Using Xsens MVN Animate, studios of all sizes can cost-effectively speed up the production of 3D character animation for games and in-game cinematics with inertial motion capture.

Motion, Captured Anywhere

Capture clean character animation in any environment. In the studio, office, or outside.

Works with Game Engines

MVN Animate can stream data straight into Unity or Unreal engine.

VR, AR, and MR

Using Xsens MVN Animate, studios of all sizes can enhance the production of 3D character animation for virtual reality, augmented reality, and mixed reality mediums using inertial motion capture.

Motion, Captured Anywhere

Capture clean character animation in any environment. In the studio, office, or outside.

Works with Game Engines

Xsens MVN Animate can stream data straight into Unity or Unreal engine.

Interactive experiences

Create realistic experiences with animation data that audiences can interact with.

Find out more about our VR, AR and MR Solution

Motion Capture / Motion Control Hardware

MVN Link

The MVN Link operates on the same 17 trackers as the MVN Awinda, but the Lycra suit ensures even more accurate data recording and allows for a bigger recording range. Additionally, the MVN Link features full GNSS support.

MVN Awinda

The MVN Awinda allows for fast, easy and reliable motion capture. The adjustable straps, quick setup time and portability make the MVN Awinda ideal for use in groups and education.

Design Highlights

Rugged design

The ultra-small trackers are rugged and designed to withstand high impact.

Perfect for rolls and stunts.

Sportive look

The motion capture suit has a sporty design and comes with matching shorts.

Fast setup

The on-body zippers enable easy access to all trackers and allow for quick setup.

Magnetic Immunity

Experience the benefits of full magnetic immunity in all conditions. Proven sensor fusion algorithms ensure the highest quality motion capture. Even in the most challenging magnetically disturbed environments, Xsens MVN Animate provides you with production quality mocap data.

Manus Finger Tracking

Xsens offers true full-body performance capture using Manus VR finger tracking for MVN Link and MVN Awinda. We provide native support for Manus VR gloves directly in Xsens’ MVN Animate motion capture software.

The Prime Xsens is a glove designed for integration with an Xsens motion capture suit, adding finger data to complete the hands on Xsens IMU suits. Xsens is the leading innovator in 3D motion tracking technology and products. The Xsens suit, in combination with the Manus Prime Xsens, enables seamless interaction in applications such as 3D character animation, motion analysis, and industrial control & stabilization.

Polygon

High-end natural motion and full-body tracking in VR

MANUS Polygon is the latest real-time software for enabling fluid full-body motion within any virtual environment. The solution uses inverse kinematics to dynamically reproduce natural body movements from 6 data points. The IK system generates an accurately proportioned virtual human skeleton, and seamlessly retargets and synchronizes the user’s motion to the bones of the chosen avatar. This system detects the finest nuances in human body motion, resulting in truly realistic virtual characters without the need for a motion capture suit.
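Inverse kinematics works backwards from a target position (for example, a tracked hand) to the joint angles that reach it. Polygon's full-body solver is not described in the source; the sketch below shows only the classic analytic two-bone case in 2D (such as upper arm plus forearm) using the law of cosines, a common building block of limb IK. Bone lengths and the target are illustrative.

```python
import math


def two_bone_ik(l1, l2, tx, ty):
    """Analytic planar IK for a two-segment limb rooted at the origin.

    Returns (root_angle, bend_angle) in radians: root_angle is the first segment's
    direction from +x; bend_angle is how far the second segment turns
    counterclockwise relative to the first (0 = straight limb).
    Unreachable targets are clamped, so the limb reaches toward them as far as it can.
    """
    # Clamp the root-to-target distance to what the two segments can span.
    d = min(l1 + l2, math.hypot(tx, ty))
    d = max(abs(l1 - l2), d, 1e-9)
    # Law of cosines: interior angle at the middle joint (elbow/knee).
    cos_mid = (l1 ** 2 + l2 ** 2 - d ** 2) / (2 * l1 * l2)
    bend = math.pi - math.acos(max(-1.0, min(1.0, cos_mid)))
    # Law of cosines again: angle between the first segment and the target line.
    cos_off = (l1 ** 2 + d ** 2 - l2 ** 2) / (2 * l1 * d)
    root = math.atan2(ty, tx) - math.acos(max(-1.0, min(1.0, cos_off)))
    return root, bend


def end_position(l1, l2, root, bend):
    """Forward kinematics: where the end of the second segment ends up."""
    mx, my = l1 * math.cos(root), l1 * math.sin(root)
    return mx + l2 * math.cos(root + bend), my + l2 * math.sin(root + bend)


root, bend = two_bone_ik(0.30, 0.25, 0.35, 0.20)  # upper arm, forearm, hand target (m)
print([round(c, 4) for c in end_position(0.30, 0.25, root, bend)])
```

Running forward kinematics on the solved angles lands back on the target, which is a quick way to check any IK solver. Full-body systems chain solves like this across many joints and add constraints to keep poses natural.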

Fast Autonomous Calibration

Unique to Polygon is the Self-calibrate tool. Designed for speed and maximum ease-of-use, the quick 7-step 45-second calibration process allows the user to operate with full autonomy. Polygon ensures speedy set-up as it automatically assigns data points to corresponding trackers delivering a full-body avatar within seconds.

Any Character, Any Virtual Setting

Polygon provides a reliable and cost-effective solution for creators of VR projects and global team collaborations within any given virtual environment. Virtual characters interact with greater realism and natural whole-body motion, giving participants a deeper sense of immersion and more intuitive experiences. The software supports both individuals and multiple users during various VR experiences, training and simulation sessions, and global VR team collaborations.

Digital Innovation Delivered!

We design, build and deliver technology-based innovations that create significant competitive advantage and revenue growth potential for our clients.