USAF Smart Helmet

Connected Modular Warfare Systems (CMWS)

The U.S. Air Force’s Connected Modular Warfare System, or CMWS, is an augmented reality device giving the foot soldier next-generation night vision, navigation, targeting and a whole lot more. The CMWS includes a militarized Microsoft HoloLens-like display, weapon-mounted sight, night-vision sensors and AI-powered central computer.

Together, these allow the soldier to navigate without having to look down, see in the dark, and shoot around corners or over walls using the camera on their rifle. That alone is useful, but adding communications and data sharing takes it to another level, turning a single-player experience into a multiplayer team game.

CMWS displays the locations of all friendly troops in the area, even when they are hidden behind obstacles, as well as the positions of opposing forces. Users can tag locations and drop markers, for example to note a possible IED location or a rendezvous point. Anyone wearing the goggles can see everything in their area just by turning their head.

Artificial Intelligence + Sensor Fusion

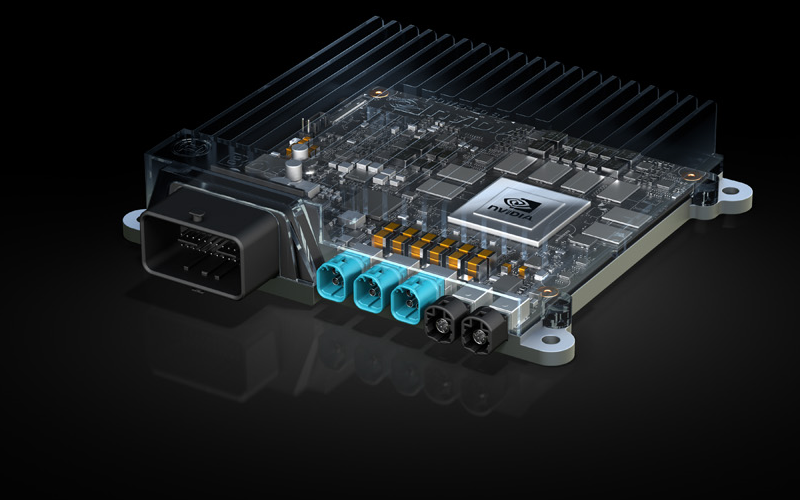

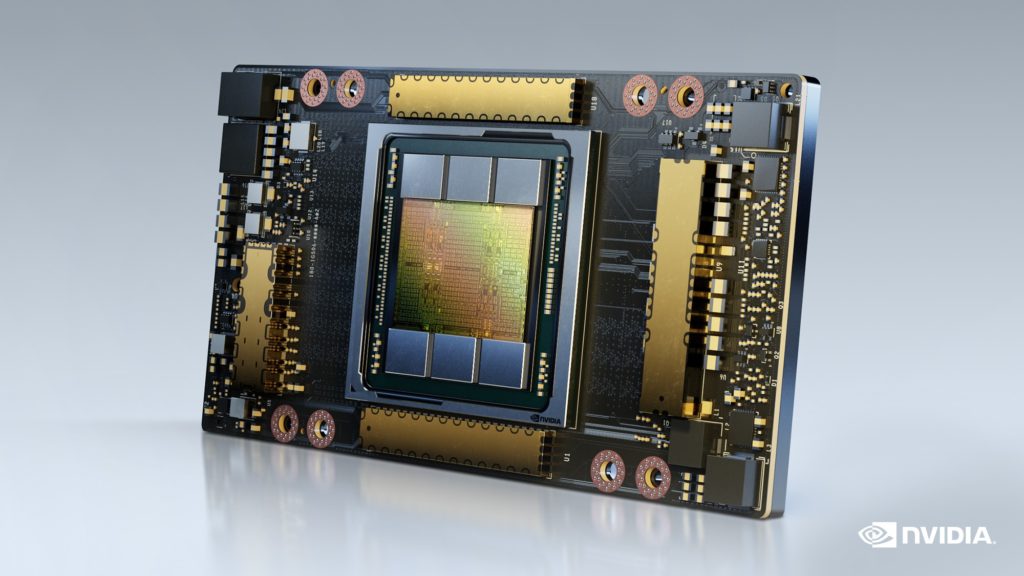

The World’s Smallest AI Supercomputer for Embedded and Edge Systems.

NVIDIA Jetson AGX Xavier brings supercomputer performance to the edge in a small form factor system-on-module (SOM). Up to 32 TOPS of accelerated computing delivers the horsepower to run modern neural networks in parallel and process data from multiple high-resolution sensors—a requirement for full AI systems.

Jetson AGX Xavier has the performance to handle visual odometry, sensor fusion, localization and mapping, obstacle detection, and path planning algorithms critical to next-generation robots.

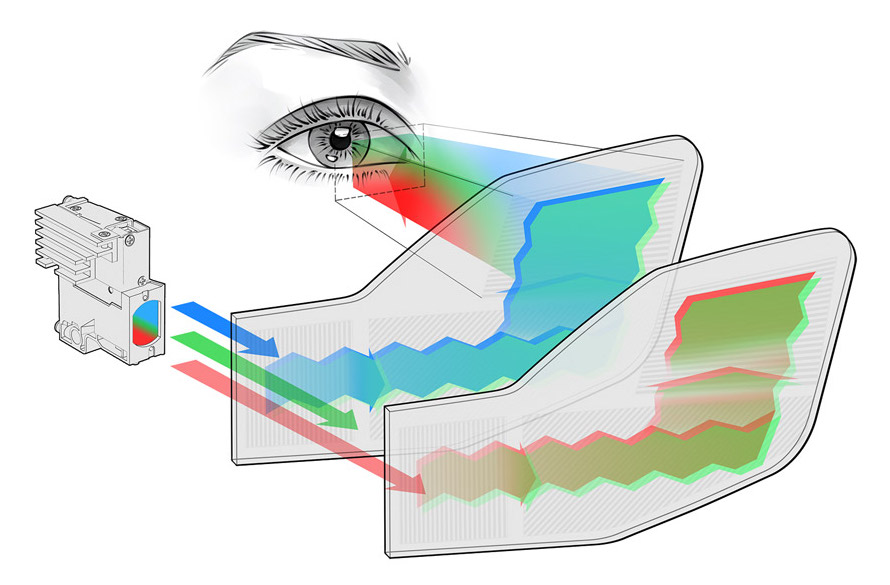

Human-Machine Interface (HMI)

Next-Generation HUD + Full-Spectrum Informational Warfare

Imagine wraparound ballistic goggles that provide full-color night vision fused with thermal imaging. Integrated within the goggle lens is a heads-up display with an information overlay showing speed, direction, navigational routes, mapping software, communications, your friends and foes, targeting, aiming integration and more.

Crystal50

Optical Waveguides

Designed with DigiLens’ breakthrough architecture, the 50° diagonal waveguide lens minimizes form factor while expanding the overall field of view. The architecture allows multiple diffractive optical structures to be multiplexed, so the optical path within the transparent display can be integrated and compressed.

World’s Smallest Wide Field of View 1080p Optical Engine

Optical Display Engine

This ultra-compact, high-brightness 1080p optical engine reference design fits into a volume of under 3 cubic centimeters (3 cc). Specifically optimized for CP’s 0.26” diagonal, 1920 x 1080 resolution, 3.015 μm pixel, all-in-one Integrated Display Module (IDM) package, the design enables Sovereign’s system designers to rapidly develop AR/MR smart glasses that deliver natural-looking visuals in more ergonomic form factors approaching those of regular consumer eyewear.
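As a quick consistency check on the quoted display specifications (not a design calculation from the source), multiplying the pixel counts by the 3.015 μm pitch gives the active area, and its diagonal should land near the quoted 0.26 inch. The helper name `panel_geometry` is illustrative, and the sketch assumes square pixels with no inactive border.

```python
import math

MM_PER_INCH = 25.4

def panel_geometry(h_px, v_px, pitch_um):
    """Return (width_mm, height_mm, diagonal_in) of a square-pixel active area."""
    width_mm = h_px * pitch_um / 1000.0   # micrometres -> millimetres
    height_mm = v_px * pitch_um / 1000.0
    diagonal_in = math.hypot(width_mm, height_mm) / MM_PER_INCH
    return width_mm, height_mm, diagonal_in

width_mm, height_mm, diag_in = panel_geometry(1920, 1080, 3.015)
print(f"{width_mm:.2f} mm x {height_mm:.2f} mm, diagonal {diag_in:.3f} in")
```

This works out to about 5.79 mm by 3.26 mm with a diagonal of about 0.261 inch, consistent with the 0.26” figure in the text.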

The CP1080p has shown up to twice the brightness of other engine designs when projected into the new generation of 50°+ wide-field-of-view (WFOV) waveguides.
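The field of view here is quoted only as a diagonal angle. As a sketch under stated assumptions (a rectilinear, flat-image-plane model, and a 16:9 image from the 1920 x 1080 engine that exactly fills a 50° diagonal), the diagonal can be split into horizontal and vertical angles and turned into an average angular resolution. Real waveguide FOV and distortion will differ, and `split_diagonal_fov` is a hypothetical helper.

```python
import math

def split_diagonal_fov(diag_deg, aspect_w, aspect_h):
    """Split a diagonal FOV into horizontal and vertical FOV for a flat image plane."""
    half_diag = math.tan(math.radians(diag_deg) / 2.0)  # tan of the half-angle
    diag_units = math.hypot(aspect_w, aspect_h)
    h_fov = 2.0 * math.degrees(math.atan(half_diag * aspect_w / diag_units))
    v_fov = 2.0 * math.degrees(math.atan(half_diag * aspect_h / diag_units))
    return h_fov, v_fov

# Assumption: the 16:9, 1920 x 1080 image exactly fills a 50 degree diagonal.
h_fov, v_fov = split_diagonal_fov(50.0, 16, 9)
pixels_per_degree = 1920 / h_fov  # average across the field; edges differ
print(f"H {h_fov:.1f} deg x V {v_fov:.1f} deg, about {pixels_per_degree:.0f} px/deg")
```

Under these assumptions the result is roughly 44° by 26°, or about 43 pixels per degree on average.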

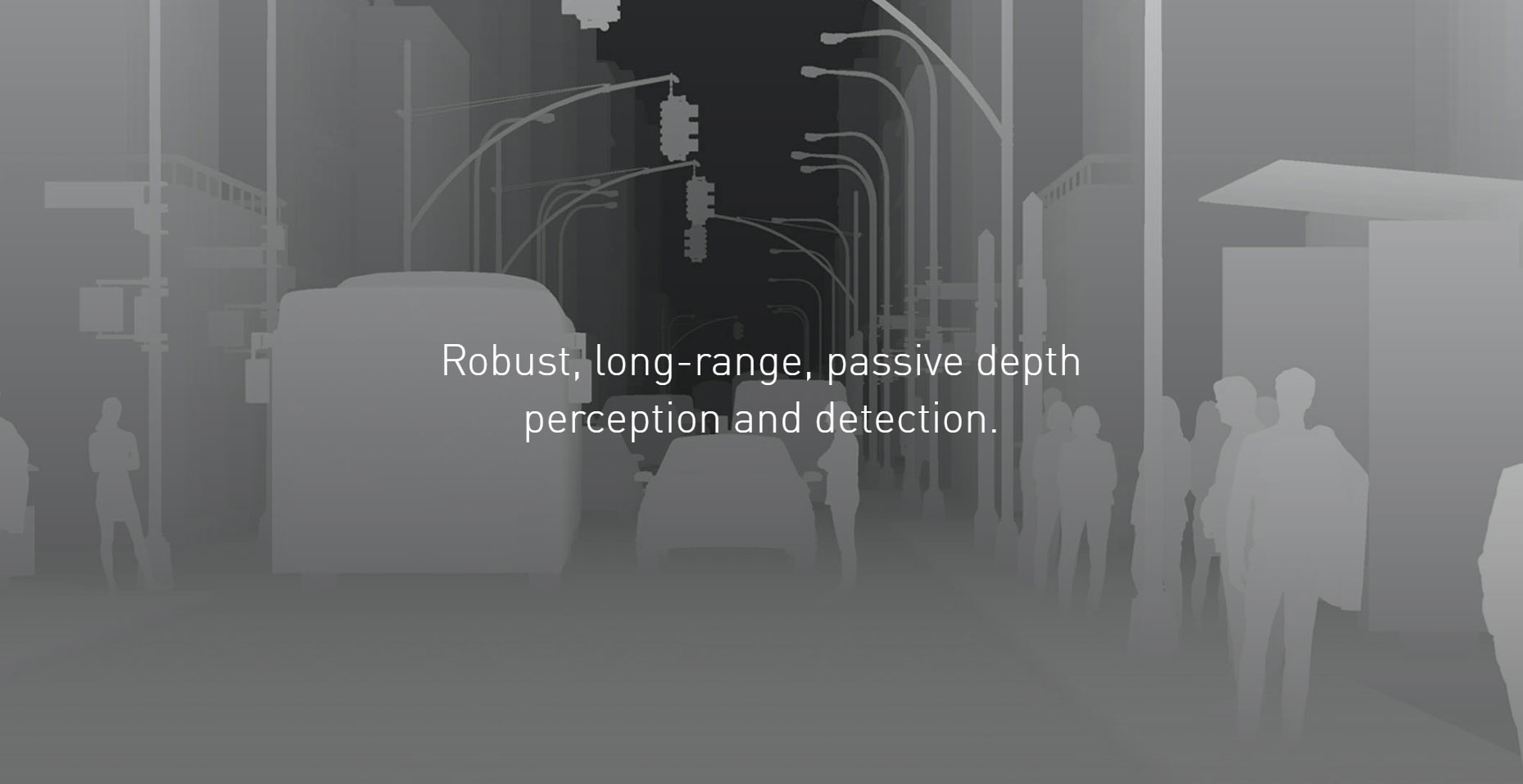

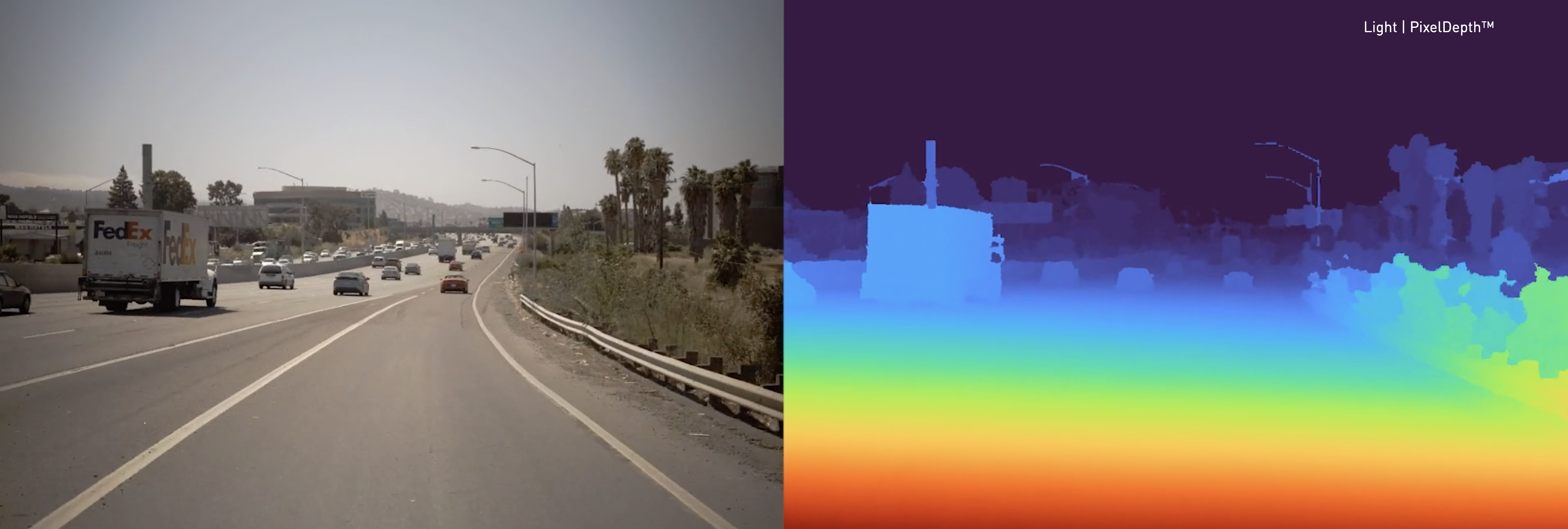

A Breakthrough In Depth-Perception

Human-Like Vision and Deep Perception

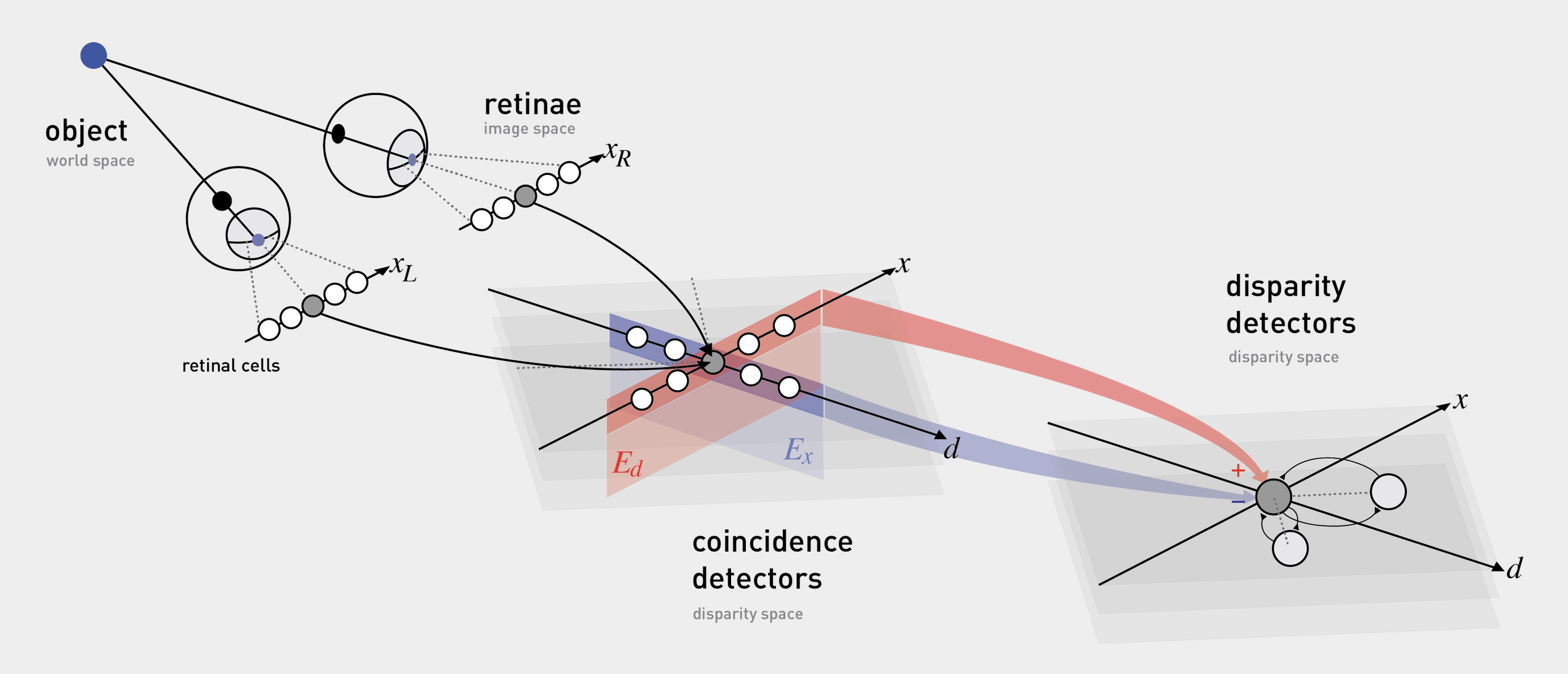

The human visual system has evolved to perceive a wide range of environments, from the tip of one’s nose to, under the right conditions, a candle flame nearly 3 kilometers away. Through stereopsis, images from both eyes are combined into a coherent 3D representation of the world, a critical step when performing perception tasks like driving.

Like the human eye, a camera can capture significant scene information from photons alone. With two or more cameras pointed in the same direction, each camera captures the scene from a slightly different perspective. Just as humans use stereopsis, machines can use parallax, the differences between these perspectives, to compute depth wherever the cameras’ fields of view overlap.
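For a rectified stereo pair, parallax shows up as a disparity d (in pixels) between matching points, and depth follows from similar triangles as Z = f·B/d, where f is the focal length in pixels and B is the baseline between cameras. The sketch below illustrates that textbook relation only; it is not Clarity’s processing pipeline, and the focal length and baseline are hypothetical.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means a point at infinity)")
    return focal_px * baseline_m / disparity_px

# Hypothetical rig: 1400 px focal length, 0.30 m baseline.
for d in (42.0, 4.2, 0.42):
    print(f"disparity {d:5.2f} px -> depth {depth_from_disparity(1400, 0.30, d):7.1f} m")
```

Because depth is inversely proportional to disparity, distant objects produce sub-pixel disparities, which is why long-range stereo depends on precise matching and calibration.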

Accurate, dense depth significantly improves subsequent processing such as object detection, tracking, classification, and segmentation (the hallmarks of perception, whether human or machine), leading to superior scene understanding.

Beyond Human Vision

The human visual system provides an incredible, general-purpose solution to sensing and perception. But that does not mean it cannot be improved upon.

Humans have two eyes on a single plane, separated by a relatively small distance. Unlike the eyes, a camera array is not limited to two apertures, a specific focal length or resolution, or even a single horizontal baseline. By using more cameras with wider and multiple baselines, a multi-view array can provide better scene depth than the unaided human eye. Cameras can also be easily reconfigured for a given scenario, providing optimal depth accuracy and precision for each use case or application.
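Differentiating Z = f·B/d shows why wider baselines help: a disparity error δd produces a depth error of roughly δZ ≈ Z²·δd/(f·B), so error grows with the square of range but shrinks in proportion to the baseline. The numbers below are hypothetical: a 1400 px focal length, a quarter-pixel matching error, and 0.065 m as a rough stand-in for human eye spacing. The helper name is illustrative.

```python
def depth_uncertainty(depth_m, focal_px, baseline_m, disparity_err_px=0.25):
    """First-order stereo depth error: dZ ~= Z**2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_err_px / (focal_px * baseline_m)

# Compare a human-like baseline with two wider, hypothetical camera baselines.
for baseline in (0.065, 0.30, 1.0):
    err = depth_uncertainty(100.0, 1400, baseline)
    print(f"baseline {baseline:5.3f} m -> +/- {err:5.2f} m at 100 m range")
```

In this model, going from a 0.065 m to a 1.0 m baseline cuts the depth error at 100 m by about 15 times.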

Cameras that see what is invisible to the human eye, such as Long-wave Infrared (LWIR) or Short-wave Infrared (SWIR), can also provide better-than-human capabilities. As with visible spectrum cameras, their non-visible counterparts can be used in a camera array with similar depth perception benefits.

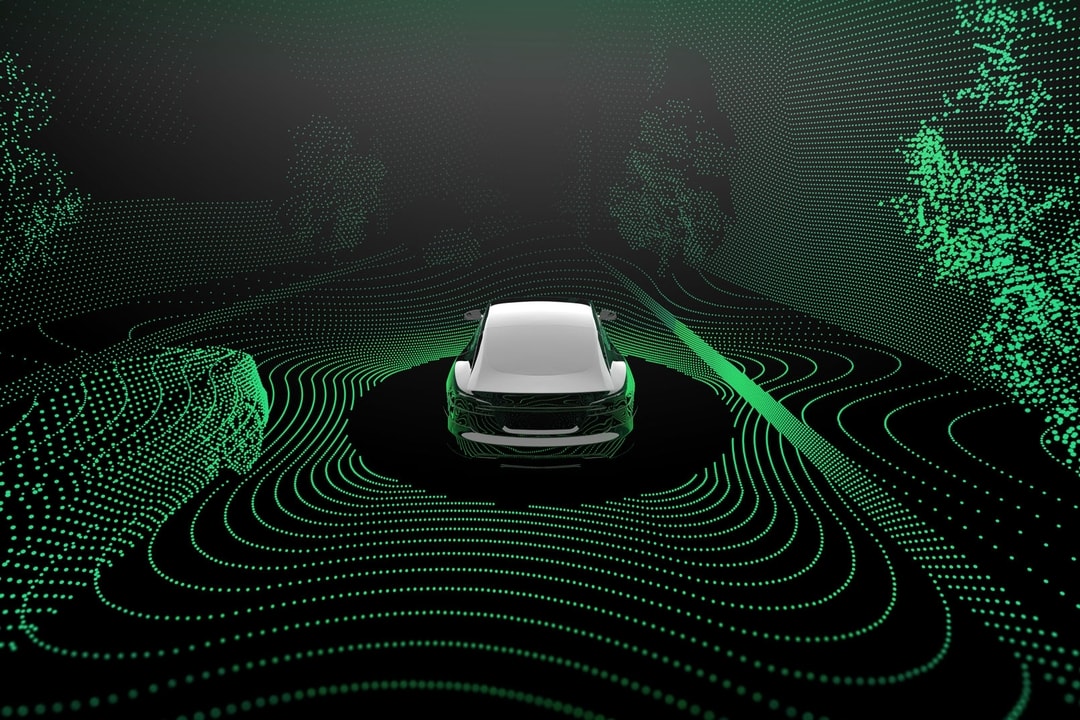

Light-Field Camera Array

Clarity is a camera-based perception platform that can see 3D people, objects, or structures on the battlefield from 10 centimeters to 1,000 meters away: three times the range of best-in-class lidar, with 20 times the detail.

With this range, a soldier can perceive depth farther than ever before and make proactive decisions about target identification, acquisition, and engagement. Near- and long-range depth information is required to make proactive, rather than reactive, decisions.

95+ million points per second

10CM – 1000+M RANGE

MEASURED — NOT INFERRED

CONFIGURABLE FOV

NO ADDITIONAL FUSION REQUIRED

RUGGED & RELIABLE

CAMERA ONLY

Multi-view perception is essential for solving scene understanding at scale

Active sensing modalities pose challenges

Scenes must be measured for true understanding

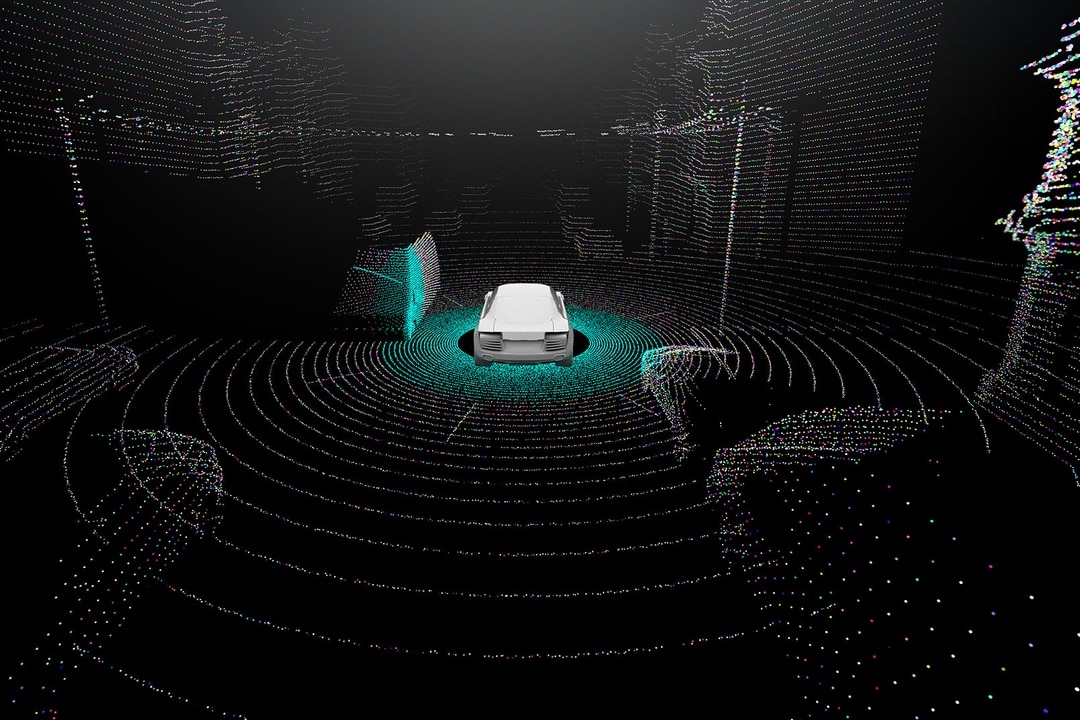

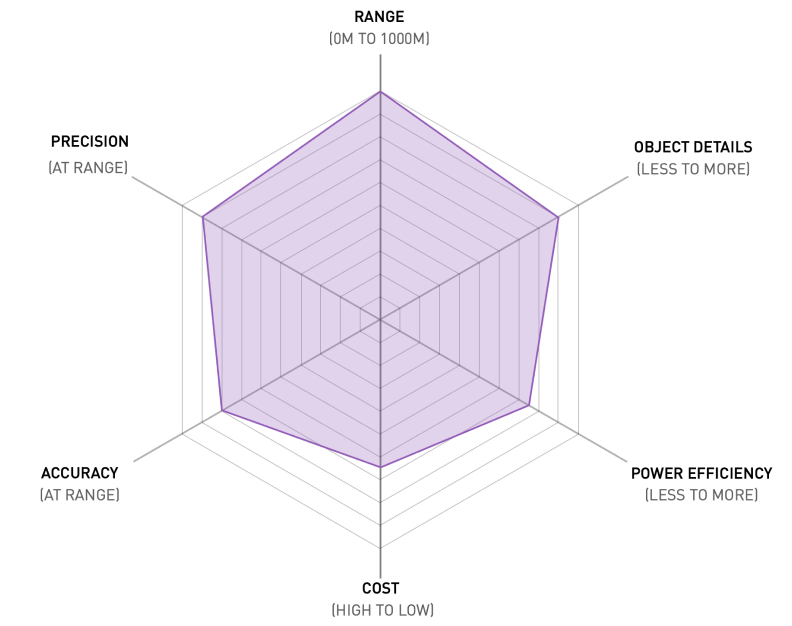

Lidar

Lidar, a popular depth-sensing technology in many markets, comes with several shortcomings, many of which are exacerbated at long range.

Shortcomings

- Limited resolution

- Active scanning

- Interference

- Eye & equipment safety

- Cost

- Manufacturability

Radar

Although radar is touted as an all-weather solution unaffected by conditions such as temperature and humidity, its capabilities are limited.

Shortcomings

- Lower horizontal & vertical resolution

- Requires sensor fusion for object recognition & classification

- Small or static object detection

- Multiple object detection

- Interference

- Active scanning

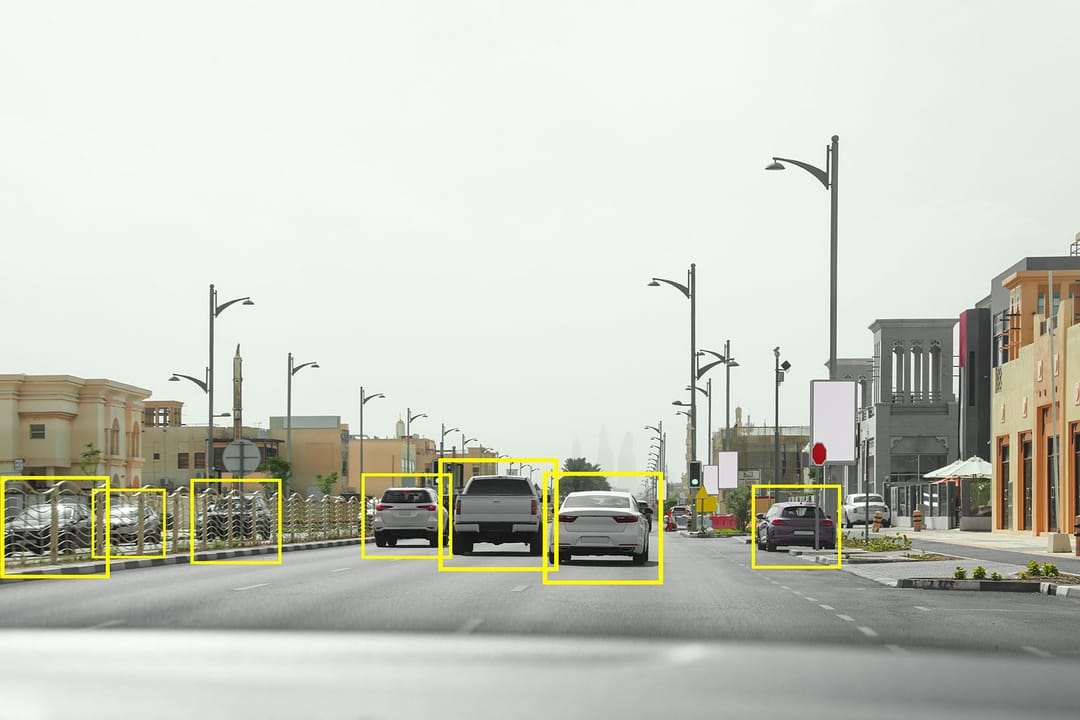

Monocular camera with inferencing

Monocular vision systems often use AI inferencing to segment, detect, and track objects, as well as to determine scene depth. But they are only as good as the data used to train their AI models, and they are often used in conjunction with other sensor modalities or HD maps to improve accuracy, precision, and reliability. Despite such efforts, “long tail” edge and corner cases remain unresolved for many commercial applications that rely on monocular perception.

Shortcomings

- Limited depth precision & accuracy

- High and unpredictable failure probability

A breakthrough in precise and accurate depth perception

Advantages

- Advanced signal processing for reliable, physics-based depth

- Native fusion of image and depth across the entire camera field of view

- Unparalleled accuracy and precision throughout the operating range

- Enables improved detection, tracking, and velocity estimation for near-, mid-, and long-range objects

- High-quality, robust multi-view calibration

- Able to detect horizontal and vertical edges

- Low power, self-contained processing by way of custom Light silicon

- Leverages existing, high volume, automotive grade camera components

Full-Spectrum Warfare

Integrated Thermal Imaging

An alternative to night vision is a thermal imager. Instead of searching for light to magnify, a thermal imager detects infrared radiation using microbolometers, whose electrical resistance changes with their temperature. These resistance changes are measured across an array of thousands of microbolometer pixels and converted into a viewable image. All objects emit some level of thermal infrared radiation; the hotter an object is, the more radiation it emits and the more that radiation changes the resistance of each bolometer.
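Two textbook relations sit behind this paragraph: the Stefan-Boltzmann law (radiated power per unit area grows as T⁴, so hotter objects emit more) and the linearised bolometer response R = R0·(1 + α·ΔT), where α is the temperature coefficient of resistance (negative for VOx). The sketch is illustrative only: the temperatures, emissivity, 100 kΩ resistance, and -2 %/K coefficient are assumed round numbers rather than Boson specifications, and the T⁴ law counts all wavelengths rather than only the LWIR band.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

def radiant_exitance(temp_k, emissivity=1.0):
    """Total power per unit area radiated by a grey body (Stefan-Boltzmann law)."""
    return emissivity * SIGMA * temp_k ** 4

def bolometer_resistance(r0_ohm, tcr_per_k, delta_t_k):
    """Linearised pixel resistance after a small temperature change: R0 * (1 + alpha * dT)."""
    return r0_ohm * (1.0 + tcr_per_k * delta_t_k)

# Hypothetical scene: skin at about 37 C versus a 20 C wall, both with emissivity 0.98.
person = radiant_exitance(310.15, 0.98)
wall = radiant_exitance(293.15, 0.98)
print(f"person {person:.0f} W/m^2 vs wall {wall:.0f} W/m^2")

# Hypothetical pixel: 100 kOhm at rest, TCR of -2 %/K (assumed), warmed by 10 mK.
r_hot = bolometer_resistance(100e3, -0.02, 0.010)
print(f"pixel resistance {r_hot:.1f} ohm (change {r_hot - 100e3:+.1f} ohm)")
```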

Boson longwave infrared (LWIR)

Integrated LWIR Thermal Camera Core

The Boson® longwave infrared (LWIR) thermal camera module sets a new standard for size, weight, power, and performance (SWaP). It uses FLIR’s infrared video processing architecture to enable advanced image processing and several industry-standard communication interfaces while keeping power consumption low. The 12 µm pitch vanadium oxide (VOx) uncooled detector comes in two resolutions: 640 x 512 or 320 x 256. It is available with multiple lens configurations, adding flexibility to integration programs.
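Pixel pitch fixes the physical size of the detector array, and together with a lens focal length it sets the field of view, HFOV = 2·atan(w/2f). The sketch below uses the 12 µm pitch and both resolutions from the text; the 14 mm focal length is a hypothetical example rather than a specific Boson lens option, and the distortion-free lens model is an assumption.

```python
import math

PITCH_UM = 12.0  # Boson detector pixel pitch quoted in the text

def active_area_mm(cols, rows, pitch_um=PITCH_UM):
    """Width and height of the detector's active area in millimetres."""
    return cols * pitch_um / 1000.0, rows * pitch_um / 1000.0

def horizontal_fov_deg(width_mm, focal_mm):
    """Horizontal field of view of a distortion-free lens: 2 * atan(w / 2f)."""
    return 2.0 * math.degrees(math.atan(width_mm / (2.0 * focal_mm)))

FOCAL_MM = 14.0  # hypothetical lens focal length
for cols, rows in ((640, 512), (320, 256)):
    w, h = active_area_mm(cols, rows)
    print(f"{cols} x {rows}: {w:.2f} x {h:.2f} mm, "
          f"HFOV {horizontal_fov_deg(w, FOCAL_MM):.1f} deg with a {FOCAL_MM:.0f} mm lens")
```

With the same lens, the 320 x 256 core covers roughly half the horizontal angle of the 640 x 512 core, which is one reason multiple lens configurations matter for integration.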

REVOLUTIONARY SIZE, WEIGHT & POWER (SWAP)

A full-featured VGA thermal camera module weighing just 7.5 grams and occupying less than 4.9 cm³

POWERFUL FLIR XIR VIDEO PROCESSING

FLIR’s expandable infrared (XIR) video processing with embedded industry-standard interfaces empowers advanced processing and analytics in an industry-leading system-on-a-chip.

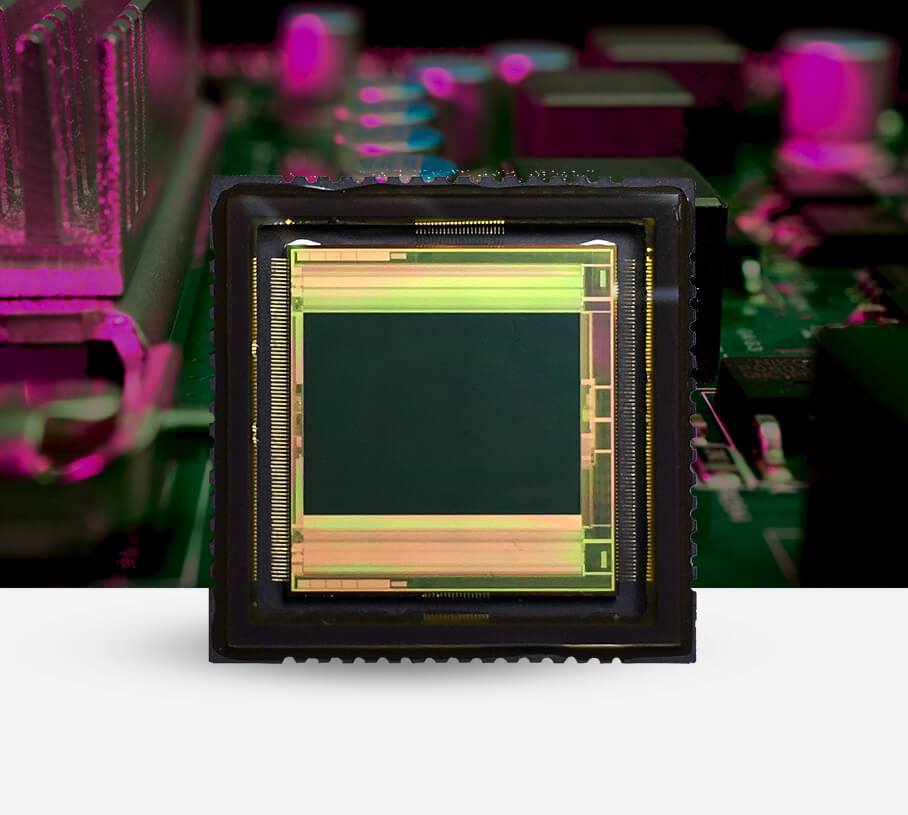

Digital Color Night Vision

Digital night vision devices operate differently from analog devices: light entering the objective lens is transformed into a digital signal by an image sensor, either a Complementary Metal-Oxide-Semiconductor (CMOS) or a Charge-Coupled Device (CCD) sensor.

The digital image is enhanced several times before it appears on the device’s display. The larger the CMOS or CCD sensor’s pixel size, the better it performs in low light. SiOnyx, for example, has patented technology that enhances sensitivity to near-infrared (NIR) wavelengths and therefore provides greater low-light performance. Its CMOS sensors deliver extremely good low-light performance thanks to a combination of this patented technology and much larger pixels. Currently, the company’s most sensitive sensor is the XQE-1310, with an impressive 1.3 megapixels to collect incoming light.
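A simple photon-budget model shows why pixel size matters: the light a square pixel collects scales with its area (pitch squared), and when photon shot noise dominates, SNR scales with the square root of the detected signal, so doubling the pitch roughly doubles SNR. This sketch ignores read noise, dark current, and optics; the photon flux is hypothetical, and the quantum-efficiency parameter is the term that improved NIR sensitivity would raise.

```python
import math

def photons_per_pixel(flux_per_um2, pitch_um, fill_factor=1.0):
    """Mean photons landing on one square pixel during an exposure."""
    return flux_per_um2 * pitch_um ** 2 * fill_factor

def shot_noise_snr(photons, quantum_efficiency=1.0):
    """Shot-noise-limited SNR: sqrt(N) for N detected photoelectrons."""
    return math.sqrt(photons * quantum_efficiency)

# Hypothetical dim scene: 0.5 photons per square micron per exposure.
for pitch in (2.5, 5.0, 10.0):
    n = photons_per_pixel(0.5, pitch)
    print(f"{pitch:4.1f} um pitch: {n:5.1f} photons, SNR {shot_noise_snr(n):4.1f}")
```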

360° Active Sensing Platform

Passive Acoustic Noise Detection Array + AI-Based Signal Processing

Active Shooter & Threat Detection

Trained snipers and other enemy shooters in Iraq and Afghanistan threaten both the safety of our troops and the successful completion of their missions, and they are the second-greatest cause of combat fatalities. Troops in noisy military vehicles often don’t even know they are being shot at until something, or someone, nearby is hit.

The Passive Acoustic Noise Detection Array (PANDA) system operates whether the soldier is stationary or moving, using multiple compact, helmet-mounted microphone arrays.

PANDA detects small-arms fire directed at the soldier’s position when the bullet trajectory passes within approximately 30 meters.

An incoming round is detected in under a second. Significant effort has gone into preventing false alarms caused by non-ballistic events such as road bumps, door slams, wind noise, tactical radio transmissions, and extraneous noise (vehicle traffic, firecrackers, and urban activity).

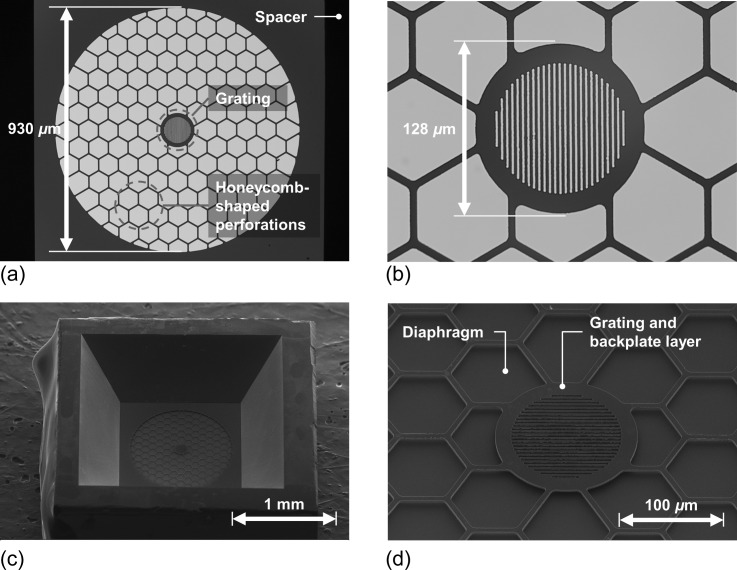

Infineon XENSIV™ – MEMS Microphone Array

Infineon’s MEMS microphones introduce a new performance class for digital MEMS microphones that overcome existing audio chain limitations. The IM69D130 is designed for applications where low self-noise (high SNR), wide dynamic range, low distortion and a high acoustic overload point are required.

Dual Backplate MEMS Technology

Infineon’s dual backplate MEMS technology is based on a miniaturized symmetrical microphone design, similar to that used in studio condenser microphones, and results in high linearity of the output signal within a dynamic range of 105 dB. The microphone noise floor is 25 dB[A] (69 dB[A] SNR), and distortion does not exceed 1 percent even at sound pressure levels of 128 dB SPL (AOP 130 dB SPL). The flat frequency response (28 Hz low-frequency roll-off) and tight manufacturing tolerances result in close phase matching between microphones, which is important for multi-microphone (array) applications.
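The quoted microphone figures are related by simple decibel arithmetic. Microphone SNR is conventionally referenced to 94 dB SPL (1 Pa at 1 kHz), so SNR is 94 dB minus the noise floor, and dynamic range is the span from the noise floor to the acoustic overload point. The pascal conversion uses the standard 20 µPa reference; the helper name is illustrative.

```python
P_REF_PA = 20e-6    # reference pressure for dB SPL (20 micropascals)
SNR_REF_DB = 94.0   # 1 Pa at 1 kHz, the usual reference level for microphone SNR

def spl_to_pascal(spl_db):
    """Convert a sound pressure level in dB SPL to RMS pressure in pascals."""
    return P_REF_PA * 10 ** (spl_db / 20.0)

noise_floor_db = 25.0  # dB(A) self-noise quoted in the text
aop_db = 130.0         # acoustic overload point quoted in the text

snr_db = SNR_REF_DB - noise_floor_db
dynamic_range_db = aop_db - noise_floor_db
print(f"SNR {snr_db:.0f} dB, dynamic range {dynamic_range_db:.0f} dB")
print(f"94 dB SPL = {spl_to_pascal(94.0):.2f} Pa, AOP = {spl_to_pascal(aop_db):.1f} Pa")
```

Both derived values, 69 dB and 105 dB, match the figures quoted above.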

Gunshot Detection Technology (Artificial Intelligence + Machine Learning)

A patented system of sensors, algorithms and AI accurately detects, locates and alerts soldiers to gunfire.

Accurately detecting and precisely locating gunshots is a challenging problem given the many obstacles of buildings, trees, echoes, wind, rain and battlefield noise.

Investments in algorithms, artificial intelligence, machine learning and more recently deep learning have enabled us to continuously improve our ability to accurately classify gunshots.

ISOLATION & DETECTION

First, highly specialized AI-based algorithms analyze audio signals for potential gunshots.

- The software filters out ambient background noise, such as traffic or wind, and listens for impulsive sounds characteristic of gunfire; we call these pulses.

- If the sensor detects a pulse, it extracts pulse features from the waveform, such as sharpness, strength, duration and decay time.

- If at least three sensors detect a pulse believed to be a gunshot, each sensor sends a small data packet to the AI Controller, which uses multilateration based on the time difference of arrival (TDOA) and angle of arrival (AOA) of the sound to determine a precise location.
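The text names multilateration from time difference of arrival but does not describe the solver. The sketch below shows one standard approach under stated assumptions: synchronized sensor clocks, a constant 343 m/s speed of sound, flat 2D geometry, and at least four sensors. Subtracting one sensor’s squared range equation from the others makes the problem linear in the source position and the unknown firing time; with exactly three sensors this linear form is under-determined, and a nonlinear solver or the angle-of-arrival data would be needed. The sensor layout and function names are hypothetical, and this is not the vendor’s algorithm.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 C (assumed constant)

def solve_linear(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def tdoa_locate(sensors, arrivals, c=SPEED_OF_SOUND):
    """Least-squares 2D source position from arrival times at four or more sensors.

    Each sensor i satisfies |p - s_i| = c * (t_i - T) for unknown firing time T.
    Subtracting sensor 0's squared equation cancels the quadratic terms, leaving
    rows that are linear in the unknowns (x, y, T).
    """
    (x0, y0), t0 = sensors[0], arrivals[0]
    rows, rhs = [], []
    for (xi, yi), ti in zip(sensors[1:], arrivals[1:]):
        rows.append([-2 * (xi - x0), -2 * (yi - y0), 2 * c * c * (ti - t0)])
        rhs.append(c * c * (ti * ti - t0 * t0) - (xi * xi + yi * yi - x0 * x0 - y0 * y0))
    # Normal equations (A^T A) u = A^T b also cover the over-determined case.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * v for r, v in zip(rows, rhs)) for i in range(3)]
    x, y, _t_fire = solve_linear(ata, atb)
    return x, y

# Hypothetical sensor layout (metres) and a synthetic shot fired at time T = 0.
sensors = [(0.0, 0.0), (400.0, 0.0), (400.0, 300.0), (0.0, 300.0)]
shooter = (250.0, 120.0)
arrivals = [math.dist(shooter, s) / SPEED_OF_SOUND for s in sensors]
estimate = tdoa_locate(sensors, arrivals)
print(f"estimated source: ({estimate[0]:.2f}, {estimate[1]:.2f}) m")
```

With noisy timestamps, the same normal equations return a least-squares estimate rather than an exact fix.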

CLASSIFICATION & LOCATION

After the software determines the location of the sound source, it analyzes the pulse features to determine if the sound is likely to be gunfire.

- To evaluate and classify the sound, algorithms consider the distance from the sound source, pattern matching and other heuristic methods.

- The machine classifier compares the sound to a large database of known gunfire and other impulsive battlefield sounds to determine if it is gunfire.

- PANDA utilizes patented microphone arrays. With 12 interconnected microphone elements, the PANDA sensor units are able to establish azimuth and elevation to the target in real-time using advanced digital signal processing.
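For the azimuth and elevation step, a compact array can treat the incoming sound as a far-field plane wave, so the delays between microphones depend only on the arrival direction. The sketch below fits that direction by least squares and converts it to azimuth and elevation. The 12-element ring geometry, the speed of sound, and the helper names are hypothetical, and this is not PANDA’s patented processing. The plane-wave model suits the muzzle blast; a supersonic bullet’s shockwave arrives from a different direction than the shooter, so a real system has to treat the two signals differently.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed constant

def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def solve3(a, b):
    """Solve a 3x3 linear system with Cramer's rule."""
    d = det3(a)
    result = []
    for k in range(3):
        mk = [row[:] for row in a]
        for r in range(3):
            mk[r][k] = b[r]
        result.append(det3(mk) / d)
    return result

def direction_of_arrival(mics, delays, c=SPEED_OF_SOUND):
    """Least-squares azimuth and elevation (degrees) of a far-field plane wave.

    delays[i] is the arrival time at mic i minus the arrival time at mic 0. For a
    unit vector u pointing toward the source, (r_i - r_0) . u = -c * delays[i].
    """
    r0 = mics[0]
    rows = [[m[k] - r0[k] for k in range(3)] for m in mics[1:]]
    rhs = [-c * tau for tau in delays[1:]]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * v for r, v in zip(rows, rhs)) for i in range(3)]
    u = solve3(ata, atb)
    norm = math.sqrt(sum(v * v for v in u))
    ux, uy, uz = (v / norm for v in u)
    azimuth = math.degrees(math.atan2(uy, ux))
    elevation = math.degrees(math.asin(max(-1.0, min(1.0, uz))))
    return azimuth, elevation

# Hypothetical 12-element layout: 8 mics on a 10 cm ring plus 4 on a raised 6 cm ring.
mics = [(0.10 * math.cos(k * math.pi / 4), 0.10 * math.sin(k * math.pi / 4), 0.0)
        for k in range(8)]
mics += [(0.06 * math.cos(k * math.pi / 2 + math.pi / 4),
          0.06 * math.sin(k * math.pi / 2 + math.pi / 4), 0.08) for k in range(4)]

# Synthesize ideal delays for a source at azimuth 30 deg, elevation 10 deg.
az_true, el_true = math.radians(30.0), math.radians(10.0)
u_true = (math.cos(el_true) * math.cos(az_true),
          math.cos(el_true) * math.sin(az_true),
          math.sin(el_true))
arrival = [-sum(m[k] * u_true[k] for k in range(3)) / SPEED_OF_SOUND for m in mics]
delays = [t - arrival[0] for t in arrival]

est_az, est_el = direction_of_arrival(mics, delays)
print(f"azimuth {est_az:.2f} deg, elevation {est_el:.2f} deg")
```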

ALERT & NOTIFICATION

The system pushes the alert notification to the soldier’s HUD.

- Incident notifications are triggered when the incident is confirmed as gunfire.

- Gunfire alerts are pushed to all team member HUDs as well as Command & Control.

- When a detection is made, the soldier receives both audio and visual warnings. During a detection event, the Heads-Up Display (HUD) shows a map or satellite image along with a visual marker identifying the detected shooter’s location.

- The entire transaction, from initial gunfire to alert, takes place in less than 3 seconds.
